Discord recently launched a new age verification system to comply with stricter online safety laws, particularly in the UK. The system requires users to verify their age through either a government ID scan or an AI-powered facial recognition test. However, this supposedly advanced security measure has already proven remarkably easy to bypass.

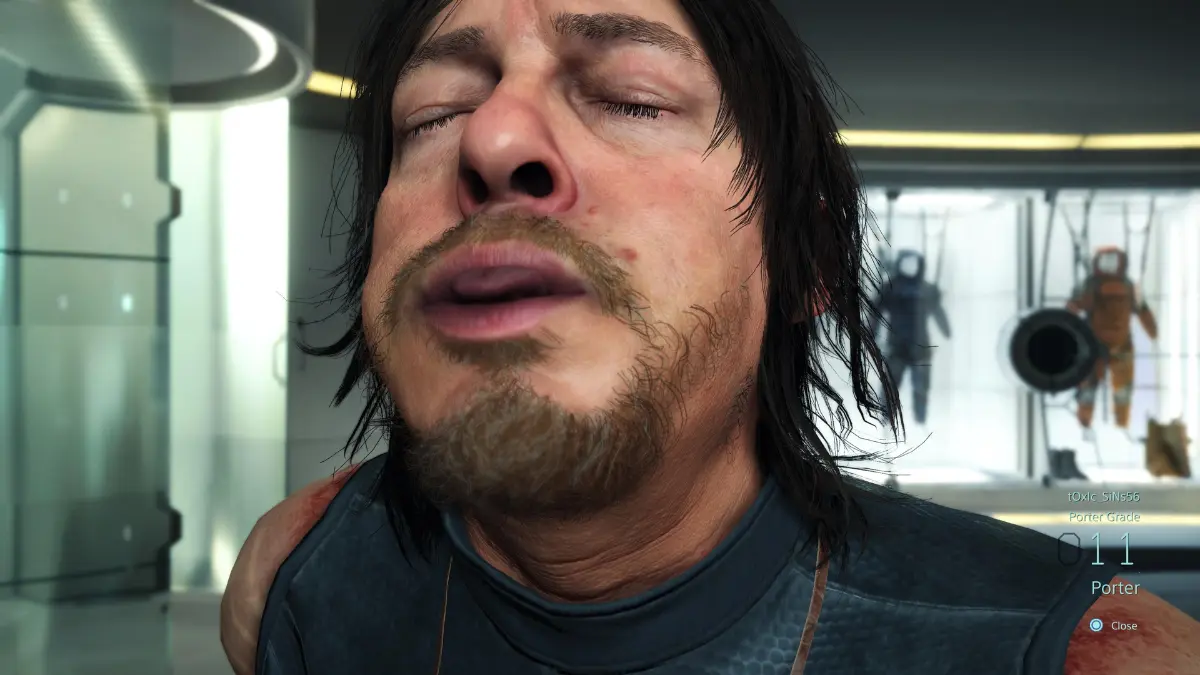

Users quickly discovered major flaws in the facial recognition option. Instead of scanning their own faces, some successfully tricked the system by holding up images of video game characters, magazine covers, or celebrity photos. One user reported that their friend defeated the verification by showing a magazine cover and using their fingers to mimic a mouth opening and closing during the interactive portion of the test.

The verification process runs through a third-party provider linked from Discord. Users can either scan their ID or complete a facial check, which sometimes includes interactive steps such as turning their head or opening their mouth. Many users report that ID scanning frequently fails, especially in countries like the UK that have no national ID card. These failures push them toward the easily fooled facial option.

Even when used as intended, the system is alarmingly inaccurate. Multiple users in their 30s report being incorrectly flagged as under 13, which locked them out of their accounts or restricted their access to features. This suggests fundamental flaws in the AI's ability to estimate age from facial features.

Privacy concerns add another layer of worry. The collection of biometric data and government IDs by a third-party service raises questions about data security and retention policies. Recent data breaches at similar verification services have heightened these concerns, and users are questioning whether Discord's implementation prioritizes actual security or merely ticks regulatory compliance boxes.

The new system arrives as Discord faces mounting pressure from legislation like the UK's Online Safety Act, which requires stronger measures to protect minors online. Tech companies that fail to comply can face significant fines and legal consequences, which helps explain Discord's rush to implement verification.

When AI goes wrong

Security experts point out that Discord’s implementation appears designed primarily for legal compliance rather than effective age verification. The system’s vulnerability to deepfakes and AI-generated images further undermines its reliability, suggesting that determined underage users could still easily gain access to restricted content.

Discord has yet to comment on these widespread reports of verification failures or address concerns about the system’s technical shortcomings. As users continue to document exploits and express frustration, the platform may need to reconsider its approach to age verification or improve its current implementation.