With more than 110 million daily users, Roblox has become one of the most influential playgrounds for children in the digital age. And though its accessibility made it a cultural touchstone for younger generations, it also opened the door to more sinister activity.

Today, Roblox sits at the center of a storm. Reports of child exploitation have surged, lawsuits are piling up, and even figures like Chris Hansen are reportedly investigating the company’s safety record. At stake is more than just Roblox’s reputation: the safety of tens of millions of children depends on whether the platform can balance its open-ended creativity with meaningful protection.

The free-to-play paradise

The core appeal of Roblox is simple: a vast library of free, user-created games where kids can jump from racing simulators to social hangouts without asking their parents to break the bank. Virtually every popular game has a Roblox knockoff, from Call of Duty and Dead by Daylight to Minecraft and even Mario 64.

The quality of these clones varies wildly. Some are great, others so bad the original devs are telling users to just pirate their game instead of playing a “microtransaction-riddled slop ripoff,” as the PEAK team recently put it. Still, the sheer fact that so many beloved games are available in some form for free is pretty astonishing.

PEAK dev responds to Roblox copy 'CLIFF' after it surpasses 163,000 plays

"Would rather you pirate our game than play this microtransaction-riddled slop ripoff" pic.twitter.com/wgX818iG70

Dexerto (@Dexerto), August 5, 2025

If I were 12 again, the very idea of Roblox would have blown my tiny mind. No need to save for months just to buy a new title; just hop on and try whatever you want. It feels like infinite potential, especially since you can even make your own games, which can be quite complex.

Accessibility drives that appeal too. Beyond consoles, Roblox runs on phones, tablets, and modest PCs. Layer on the social side—chatting with friends, customizing avatars, showing off digital items—and it becomes less a gaming app and more a cultural space where kids spend hours every day.

Roblox’s popularity in numbers

Countless children happen to do just that, with Roblox Corporation estimating its game is played by half of Americans under 16. Globally, the company reported 111.8 million daily active users and 380 million monthly active ones in Q2 2025. So, about as many people play Roblox daily as there are PlayStation 5 and Xbox Series X/S owners combined.

In late August 2025, Roblox made gaming history with 47.3 million concurrent players—meaning roughly one in 172 people was playing at the same time. “Meaning” is doing some heavy lifting here, since countless bots are surely inflating that figure. But the same caveat applies to Steam, whose all-time record of 41.2 million concurrent users was set back in March.
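The "one in 172" figure depends on which world-population estimate you divide by. As a rough sketch, the snippet below works backward from the article's numbers to the population that claim implies, then recomputes the ratio under an assumed round figure of 8.2 billion. That population value and the helper names are illustrative assumptions, not numbers from Roblox or the sources cited here.

```python
# Peak concurrent figures quoted in the article.
ROBLOX_PEAK_CONCURRENT = 47_300_000
STEAM_PEAK_CONCURRENT = 41_200_000

# ASSUMPTION: a round world-population figure for 2025, not from the article.
ASSUMED_WORLD_POPULATION = 8_200_000_000


def one_in_n(population: int, players: int) -> float:
    """Return N such that one in N people in `population` is a player."""
    return population / players


def implied_population(n: int, players: int) -> int:
    """Return the population that a 'one in n' claim implies."""
    return n * players


ratio = one_in_n(ASSUMED_WORLD_POPULATION, ROBLOX_PEAK_CONCURRENT)
print(f"Under the assumed population: one in {ratio:.0f} people")
print(f"'One in 172' implies a population of "
      f"{implied_population(172, ROBLOX_PEAK_CONCURRENT):,}")
print(f"Roblox peak vs. Steam peak: "
      f"{ROBLOX_PEAK_CONCURRENT / STEAM_PEAK_CONCURRENT:.2f}x")
```

Either way the result lands in the low 170s, so the article's rounding holds up under any plausible population estimate. The bot caveat matters far more than the choice of denominator.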

Roblox has thus become the default option for young gamers not because it’s the highest-quality experience, but because it’s the easiest to access, the most flexible, and the most social. Given its popularity, predators looking for targets know they’ll find a massive audience there.

Roblox’s growing pedophile problem

Arrests tied to Roblox child predators go back years, and actually seem to be climbing despite the ever-increasing public awareness of the issue and the resulting scrutiny.

Between 2018 and 2024, Bloomberg Businessweek tallied at least 24 U.S. arrests for abducting or sexually abusing children groomed via the platform. That averages out to roughly three to four per year. In contrast, at least six people were arrested over allegations of using Roblox to prey on children in the first half of 2025 alone.
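The year-over-year comparison above can be checked with a short calculation. This is only a sketch: the arrest counts come from the article, but whether "between 2018 and 2024" spans six or seven calendar years is an assumption, so both are shown. Annualizing the 2025 half-year figure is likewise a simple extrapolation, not a forecast.

```python
# Counts quoted in the article (both are "at least" figures).
ARRESTS_2018_2024 = 24
ARRESTS_H1_2025 = 6


def per_year(count: int, years: float) -> float:
    """Average number of arrests per year over a period of `years`."""
    return count / years


# ASSUMPTION: the range may be read as six years or as seven inclusive years.
rate_six_years = per_year(ARRESTS_2018_2024, 6)
rate_seven_years = per_year(ARRESTS_2018_2024, 7)

# ASSUMPTION: the second half of 2025 continues at the first half's pace.
annualized_2025 = per_year(ARRESTS_H1_2025, 0.5)

print(f"2018-2024, six-year reading:   {rate_six_years:.1f} per year")
print(f"2018-2024, seven-year reading: {rate_seven_years:.1f} per year")
print(f"2025, annualized from H1:      {annualized_2025:.1f} per year")
```

Under either reading, the 2025 pace is roughly three times the earlier average.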

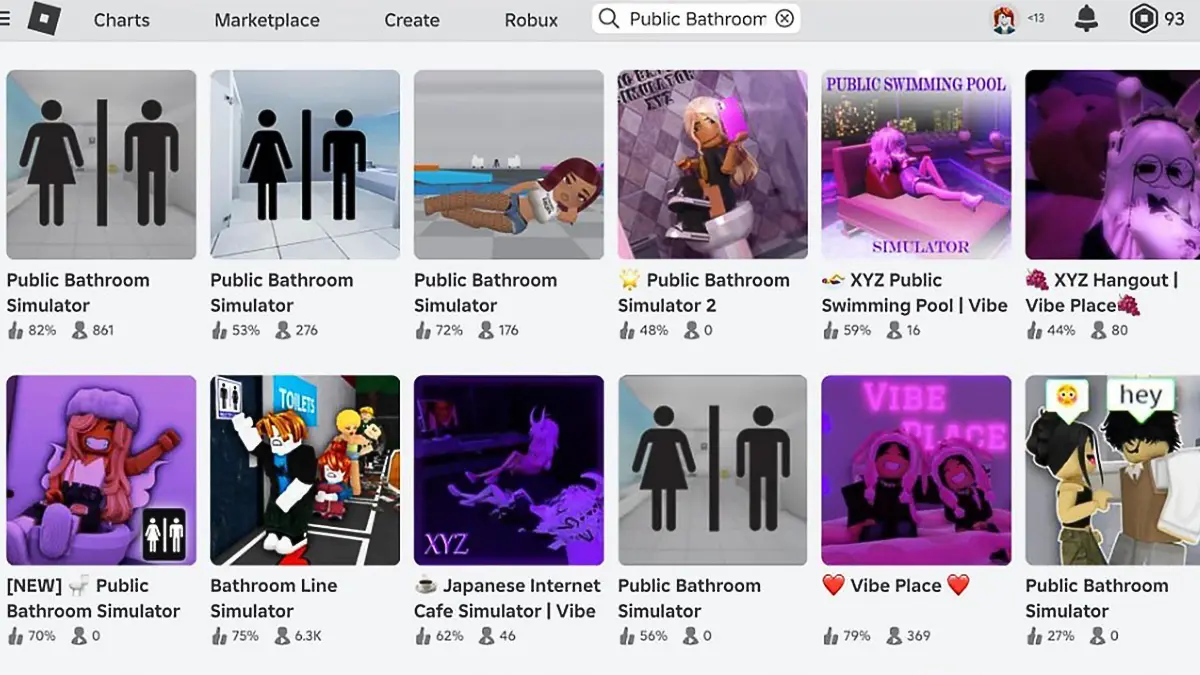

Early “condo” and hangout games exposed a significant moderation gap on the platform. Long before today’s stricter age gates, Roblox was dogged by reports of sexual role-play spaces and notorious one-offs like Public Bathroom Simulator, where people were found to be play-acting sexual assault.

Moderation always existed in some shape or form, but critics called it reactive and easy to evade. Roblox has long paired human moderators with automation (including AI tools, as of late) and parental controls, yet reporting shows offenders routinely dodged filters and shifted chats to apps like Discord.

Suspected child exploitation cases on Roblox are growing

NCMEC CyberTipline data self-reported by Roblox jumped from 675 cases of suspected child sexual exploitation in 2019 to 2,203 in 2020 and 24,522 in 2024. That’s an increase of roughly 3,533% over five years.
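For readers who want to verify the headline percentage, here is a minimal sketch of the calculation using the three report counts quoted above. The function name is illustrative. Note that a percentage increase measures the change relative to the starting value, so it is always 100 points lower than the "times as many" multiple.

```python
# CyberTipline report counts quoted in the article, keyed by year.
REPORTS = {2019: 675, 2020: 2_203, 2024: 24_522}


def percent_increase(old: int, new: int) -> float:
    """Percentage change from `old` to `new`, relative to `old`."""
    return (new - old) / old * 100


increase_2019_2020 = percent_increase(REPORTS[2019], REPORTS[2020])
increase_2019_2024 = percent_increase(REPORTS[2019], REPORTS[2024])
multiple_2019_2024 = REPORTS[2024] / REPORTS[2019]

print(f"2019 -> 2020: +{increase_2019_2020:.1f}%")
print(f"2019 -> 2024: +{increase_2019_2024:.1f}%")
print(f"2024 reports are {multiple_2019_2024:.1f}x the 2019 count")
```

The 2019 to 2024 result comes out just under 3,533%, or a little over 36 times the 2019 count.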

While the pandemic boosted the popularity of all stay-at-home activities, video games included, the surge in reported cases highlights how inadequate Roblox’s child safety practices had been rather than reflecting a sudden shift after 2020. Those thousands of additional suspected child sex offenders didn’t suddenly appear five years ago—they were just flying under the radar.

Roblox’s popularity among children is likely a big reason predators flock to the platform. But it may not be the only one, with some critics previously accusing Roblox of encouraging illicit behavior with its lax moderation standards.

Take Public Bathroom Simulator, for example. The game sparked a massive trend of bizarre Roblox titles where kids were seemingly encouraged to role-play sexual acts, either with other children or with adults posing as kids.

The original Public Bathroom Simulator finally shut down in June 2025, but not before its creator rejected the idea that the game contributed to the culture making Roblox a potentially unsafe environment for children.

“I tried moderating, but I’ve realized it is a huge waste putting any more effort into a game where the majority playerbase want [sic] to break the rules no matter what I do,” the creator wrote back in May.

If the historical numbers painted a grim picture, the headlines of 2025 have made things look even worse. Roblox is now facing lawsuits, vigilante controversies, and mounting pressure from politicians and the media—all at the same time.

States strike back

In August 2025, Louisiana’s attorney general sued Roblox, accusing the company of failing to protect children from predators who used voice-changing tech and weak age verification to groom minors.

The complaint framed Roblox as a “hunting ground” and argued the platform’s safety tools are inadequate despite its massive under-13 user base.

One of the Public Bathroom Simulator clones, titled PBS Vibe, was cited in the lawsuit as evidence that Roblox allows sexually explicit experiences that can be accessed by anyone.

Games with descriptive names like “Diddy Party” and “Escape to Epstein Island” were mentioned in the same context by the suit. All of them have been removed from the marketplace as of early September 2025. In fact, Roblox seems to have nuked anything mentioning the keywords “condo,” “diddy,” or “epstein.”

The Louisiana case appeared almost in tandem with a lawsuit filed by a Georgia family alleging their nine-year-old was extorted by a Roblox predator.

In Florida, a 400-case group lawsuit includes the story of an 11-year-old girl who was groomed on Roblox and, after the offender moved the interaction to Discord, kidnapped and assaulted.

Vigilante hunters, Chris Hansen, and the Schlep saga

The public pressure on Roblox hasn’t just come from lawyers, as the platform also found itself targeted by vigilante predator-catching content creators.

The most famous recent case is Andrew “Schlep” Michael, a YouTuber who posed as children on Roblox and collaborated with police, helping secure at least six arrests. Roblox Corp responded by banning Schlep and serving him with a cease and desist letter in August, threatening to sue if he continued his activity.

The company said Schlep’s actions violated its Terms of Service, citing rules against impersonation, entrapment, and using the platform to simulate child endangerment. It argued that even well-intentioned vigilante operations risk exposing real minors to unsafe interactions and can interfere with proper investigations.

The story drew national attention and even the backing of To Catch a Predator host Chris Hansen, who teamed up with Schlep on a documentary about Roblox’s grooming problem.

Banning the good guys?

Roblox maintains that child safety work should be handled by its own moderation team in partnership with law enforcement, not by independent YouTubers creating sting content in pursuit of profit.

The company argues that encouraging or even just allowing such activity would invite even more attempts at vigilante justice, which it strongly opposes on the grounds that they complicate its own efforts to combat child predators.

Schlep isn’t just any content creator, though. He himself was a victim of child grooming on the platform, which he experienced between the ages of 12 and 15.

Now 22, Schlep says his groomer was a partnered developer whose merchandise was distributed by Roblox Corp nationwide. That personal history fuels his drive to expose predators.

The YouTuber’s ban prompted a bevy of strong online reactions, including an anti-Roblox petition started by Congressman Ro Khanna. Supporters argue that Schlep’s motives clearly go beyond chasing “content” and that banning him shows Roblox Corp is more inclined to punish people drawing attention to its problems than to address the issues themselves.

Whether Roblox’s reasoning for retaliating against Schlep makes sense depends on how much faith you have in its safety systems—systems that, by the company’s own numbers, still flag tens of thousands of potential exploitation cases each year and missed nearly all of them until just a few years ago.

The platform’s defense

Asked to comment on its current efforts to crack down on predators in its virtual playground, Roblox Corp provided the following statement to Spilled:

We work toward the highest standards of safety for our users and our safety features and policies are purposely stricter than other platforms. Our 24/7 moderation system continually monitors for both inappropriate content and bad actors to help prevent attempts to direct users off platform, where safety systems and moderation may be less stringent than ours.

For instance, Roblox limits chat for younger users and does not allow user-to-user sharing of images or personally identifiable information. We review abuse reports and take appropriate action, including banning accounts and, when warranted, proactively reporting to law enforcement.

In partnership with law enforcement and child safety organizations, we continuously strengthen our safety policies to adapt to bad actors’ evolving tactics, launching over 100 new safety features just since January. While no system is perfect, our goal is to always improve and lead the industry in child safety.

Roblox Spokesperson

Recent verifiable data supports Roblox Corp’s claim that it’s consistently rolling out new measures to enhance child safety.

The number of incidents that the company self-reported to the National Center for Missing and Exploited Children has been steadily rising for half a decade now, and that trend is now aided by AI-powered safety tooling like Sentinel. Since launching in late 2024, the early warning system has flagged around 1,200 potential child exploitation attempts on Roblox.

The tech keeps improving too, which is encouraging given that predators themselves seemingly haven’t grown much more sophisticated since the early days of chat rooms; they’re just changing platforms because the kids are. Now they’re up against prevention mechanisms that may be too complex to even understand, let alone bypass.

This push toward heavy-duty moderation, assisted by AI that flags sus messages and even guesstimates how old someone is based on their activity patterns, could prove crucial in combating Roblox child predators. It should also prove scalable, doing way more than a single vigilante or even an anti-pedo Justice League could ever hope to accomplish.

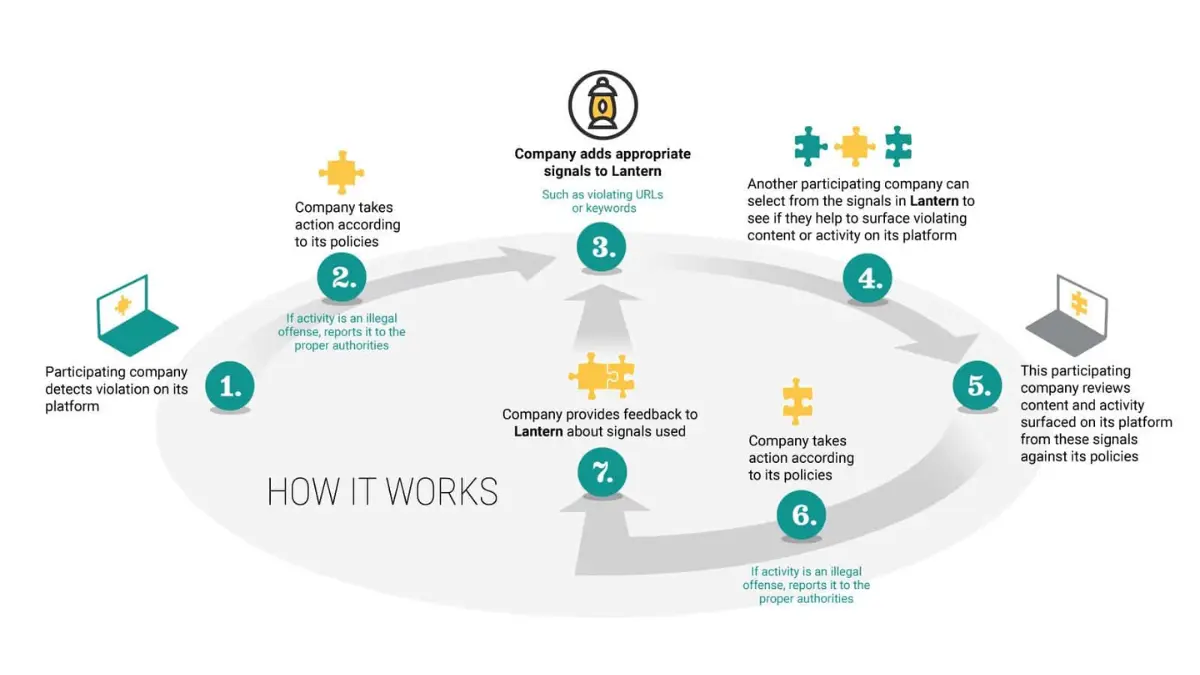

Roblox Corp also keeps collaborating with law enforcement proactively, like by developing and supporting trusted flagging systems that make it hard for suspected predators to hop between platforms. To that end, the company’s also plugged into industry groups like the Tech Coalition, Lantern (cross-platform signal sharing), and ROOST, where it open-sourced a voice-safety classifier.

More recently, Roblox started hiding 17+ content from child users’ search results and will soon stop players who haven’t verified their age with a government-issued ID from finding any such content altogether. This might help with the issue of its ecosystem making it too easy to stumble on age-inappropriate content, as noted in the Louisiana AG’s lawsuit.

As part of the same overhaul, the minimum age requirement for accessing all “Restricted” experiences will be raised from 17 to 18 later in 2025. And come September 30, Roblox will start disabling any unrated experiences unless their creators add a proper age label, closing off another loophole.

To top it off, Roblox just announced that its age-estimation system will be expanded to all users, alongside a partnership with the International Age Rating Coalition (IARC) to strengthen global age and content ratings.

This means experiences will be aligned with the same global content ratings you see on the App Store, Google Play, and consoles. That should give parents a clearer idea of what their kids are playing, and give Roblox a stronger case when regulators ask how seriously it takes child safety.

But does it actually work?

It’s still too early to judge the true impact of Roblox’s recent policy changes aimed at combating child predators. For now, it’s fair to say they’ll help, but they’re no silver bullet.

The big jump in reporting volume could reflect better detection, or simply a backlog of issues finally getting counted. What these changes don’t tackle directly is the underlying issue: predators preying on children and avoiding detection.

A sophisticated early warning system might drive a predator off Roblox, but that doesn’t stop kids from following or engaging with them on Discord or other less-moderated spaces.

That’s where initiatives like Lantern come in, flagging potential offenders across platforms in a way that avoids burdening innocent users in cases of false positives. After all, no one’s going to jail just because a game miscalculated their age.

The real test will be whether police outcomes—namely, arrests and rescues—rise alongside Roblox’s reporting numbers, not just the report count itself. We have substantial data from the platform’s looser moderation era that started all the way in 2006, but very little so far from this new, stricter one.

Playground or hunting ground?

There’s a finite number of times you can be mentioned by the Department of Justice talking about child predators before parents start seeing your “child-friendly” product in a less flattering light.

Roblox is long past that threshold, with its current DOJ shoutout tally standing at 72, according to Google. These include multiple cases that made national news.

So, no Roblox after dinner, little Timmy. Cry all you want, but at least Chris Hansen won’t be showing up at your birthday party.

Of course, none of this means Roblox Corp is “fine” with predators lurking on its servers. The uncomfortable truth is simpler: anything massively popular with kids will attract predators.

The only thing that makes Roblox different is the sheer scale—tens of millions of minors logging in daily—and the platform’s constant struggle to balance openness and innovation with real safety.

The path forward isn’t easy, but it is obvious: more transparent moderation, more industry-wide collaboration, and an honest reckoning with how predators exploit Roblox’s social features.

Technology can help, but culture matters too. Parents, players, and the platform itself all share responsibility for making Roblox what it claims to be: a playground, not a hunting ground.