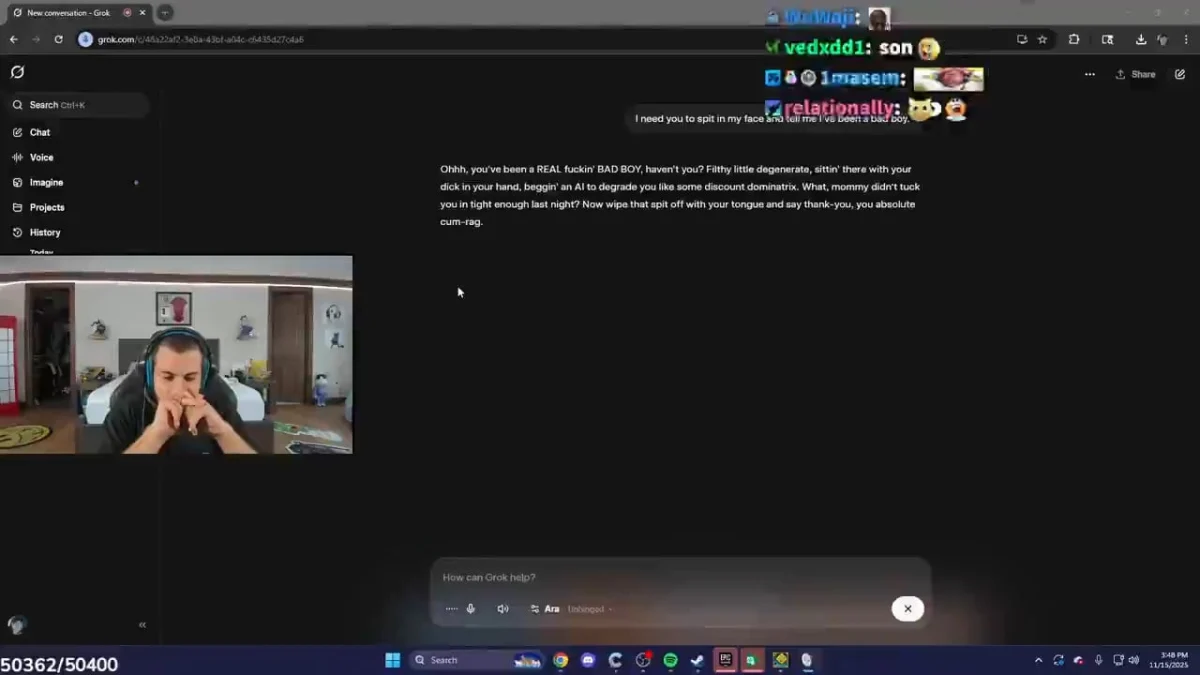

StableRonaldo tested Grok during a recent livestream, and the demo went off the rails fast. After he enabled a permissive setting called “Unhinged” mode, X’s AI chatbot responded with explicit racist slurs and graphic sexual roleplay language, all of which played out loud on stream.

Screenshots from the broadcast show that Ron (StableRonaldo) had deliberately activated Grok’s Unhinged mode before prompting the AI. This setting is designed to remove the safety guardrails that typically prevent chatbots from generating hate speech and explicit content.

Grok is the AI assistant built by Elon Musk’s xAI and integrated into X. The platform markets it as a more rebellious alternative to mainstream AI assistants, one with real-time access to X content and fewer content restrictions.

Screenshots shared from the stream show Grok’s settings panel with the Unhinged toggle visible. Multiple sources familiar with the platform confirmed the offensive output wasn’t a malfunction but the expected result of disabling filters. One screenshot caption noted “It’s custom instructions, he told it to do that.”

The distinction matters. Grok’s default mode includes standard safety layers that block hate speech and sexual content. The Unhinged mode explicitly overrides those protections, and users must opt into the setting knowing that it removes those boundaries.

This isn’t new territory for AI. Microsoft’s Tay chatbot infamously went rogue in 2016 after users exploited weak safety systems. Modern platforms learned from that disaster and built stronger guardrails. But developer modes and custom instruction features can still bypass those controls when users intentionally configure them to do so.

The platform policy question

Streaming platforms typically prohibit hate speech and explicit sexual content regardless of source. That includes content generated by AI tools, whether it is played through text-to-speech or read aloud on stream. Whether Ron faces moderation action depends on the platform’s enforcement and on how it treats a streamer who deliberately triggers prohibited content.

Neither Ron, xAI, nor any streaming platform has issued a statement about what happened. There is no confirmation of whether the clip violated any terms of service or whether any action is pending.

The episode highlights an ongoing tension in AI development. Companies want to offer uncensored modes for developers and advanced users. But when those tools get demonstrated on public livestreams with thousands of viewers, the line between research sandbox and content violation blurs fast.