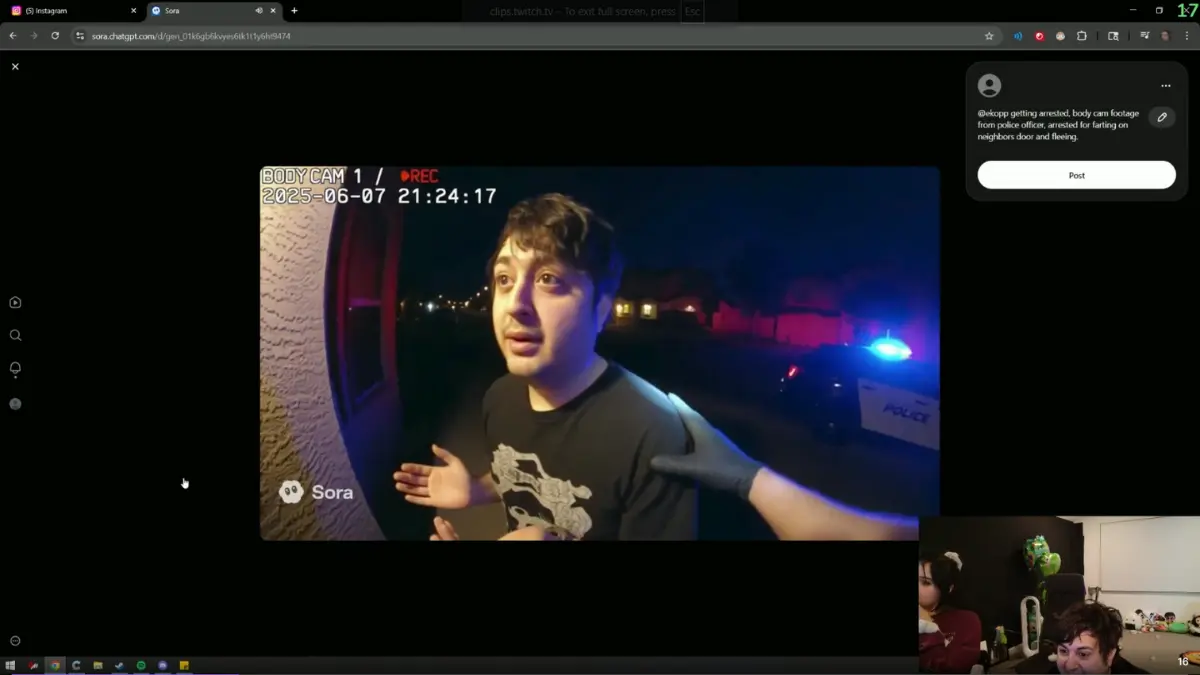

A video claiming to show police bodycam footage of Twitch streamer Pokelawls being handcuffed and arrested has gone viral across social media. The clip is not real. It’s entirely AI-generated.

The fake footage mimics the look and feel of authentic police bodycam video. It shows officers detaining someone designed to look and sound like Pokelawls. The video includes realistic camera shake, ambient audio, and color grading that closely matches real law-enforcement footage.

The clip features comedic dialogue, including a memorable exchange in which someone asks, “For a fart?” That line should have been a giveaway that the video was meant as a skit rather than genuine documentation of a crime.

But the visual quality fooled many viewers at first glance, and the video spread rapidly online as people shared what they believed was real arrest footage.

Other viewers soon identified the video as AI-generated. Comments pointed out that it is part of a recent wave of hyperrealistic “AI crime” videos circulating online. These clips use advanced text-to-video tools and deepfake techniques to create convincing fake scenarios.

Pokelawls is a Canadian Twitch variety streamer with over a million followers. He’s known for VRChat content, IRL streams, and collaborations with other creators. His streams often feature improvisational comedy, and he has no known history of legal trouble that would match this supposed arrest.

Recent AI advances now allow creators to fabricate bodycam-style footage with realistic motion, lighting, and audio. These tools can model a person’s likeness and clone their voice using publicly available videos. The bodycam aesthetic adds false credibility because viewers associate this camera style with authentic documentation.

The video illustrates the growing challenge of verifying online content. Authentic bodycam footage is almost always accompanied by public records, police statements, or local news reports. None of those exist in this case.

Platform policies on major social networks prohibit deceptive deepfakes, especially when they depict alleged crimes. It’s unclear whether the original video included any disclosure labels identifying it as AI-generated content.

This incident highlights a troubling trend. As AI video tools become more sophisticated, distinguishing real footage from synthetic content grows harder. Bodycam-style videos carry particular risk because they exploit viewer trust in law enforcement documentation.